Mint.com provides a free service, which is able with your authorization, to connect to your bank(s), retrieve your bank account information and all your transactions and provide value-add services with the data. In particular, it allows you to see where you money is spent and give you hints at how you could save money. I personally think it is a superbly designed UI to the user data held at banks, which shows how much value there is to unlock, and also how much startups can be so much more efficient at delivering innovating services than banks themselves sometimes. Yodlee, a partner of Mint.com, was a dot com era example of this and Mint.com might be their Web 2.0 equivalent.

One problem is: Mint.com requires you to provide your actual username and password to the online banking service provided by your bank, which you use not just to view transactions, but also to make payments, transfers, etc.This approach has several drawbacks. One is that the user can be legitimately concerned about what would happen if this information would get compromised. Right now, Mint.com reassures its customers by saying: “We don’t store your info, Yodlee does”.

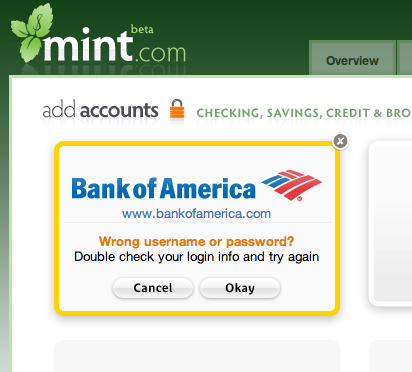

Another problem is that every time your online banking username or password changes, Mint.com stops working until you reconfigure it:

Not very convenient. Add to this the fact that you can’t have any guarantee of what happens to your username/password when you want to terminate your relationship with Mint.com/Yodlee, and you may feel like you won’t use this application for now.

An implementation of OAuth protocol between Yodlee/Mint.com, the user and the bank can solve these problems at once, and would certainly further drive user adoption.

OAuth is self-described as a protocol for “secure API authentication”, but a better way to put it is that OAuth is a way for users to grant controlled access to their data hosted at online service A to another online service B. To use the car metaphor, if your data at online service A was a car, and online service B was the valet parking person, OAuth would be the way for the valet parking person to ask you for your car’s valet key, with which he can only drive a few miles and can’t open the trunk, and the way for you to give him the key. In security jargon, OAuth allows to delegate capabilities on your data to other applications, in the form of signed tokens, i.e. authorizations to do specific things with specific data that you sign with your identity. The beauty of it is: because these capabilities are signed by you, it can be presented by online service B to access your data at online service A without you having to provide your identity credentials (username/password typically) to online service B.

Coming back to the Mint.com/Yodlee use case, here is how it would work:

- The user would go to Mint.com to request access to his data at the bank.

- Mint.com would request his bank a token for a specific capability, for instance, retrieving transaction data

- Upon receiving this request token, Mint.com would redirect the user to the bank’s token authorization page.

- The user then authorizes the token (If he is not already logged in, he would do that first)

- Mint.com can then substitutes the request token with the access token, and access the user’s data as they requested and as the user authorized, until the token is invalidated or expires.

Here are the benefits of OAuth for the user in this use case:

- Mint.com/Yodlee never know the username/password used to log in at the bank.

- When the user changes his username/password, Mint.com/Yodlee can still retrieves transaction data

- When the user decides to terminate the relationship with Mint.com/Yodlee, he knows they don’t have is username/password and he knows that they can’t access his data anymore.

- When you don’t want to use Mint.com/Yodlee anymore, you can simply invalidate

The big question is: how much work would be involved at banks and Yodlee to support OAuth, and in particular, what would they have to change?